
The way organizations think about data has changed many times over the years. With recent advances in machine learning, data management processes are being reshaped faster than ever. The rapid growth of available and accessible data calls for modern ways of managing and handling very large data assets. The end-to-end routes that data takes through an architecture are known as pipelines. Every pipeline has one or more source systems and one or more target systems, through which the available data is accessed and manipulated.

In these pipelines, data goes through various stages, including transformation, validation, and normalization. People often confuse the ETL pipeline with the data pipeline. This blog post is intended to answer two questions.

- What is the difference between an ETL pipeline and a data pipeline?

- How do you build an ETL pipeline?

ETL Pipeline

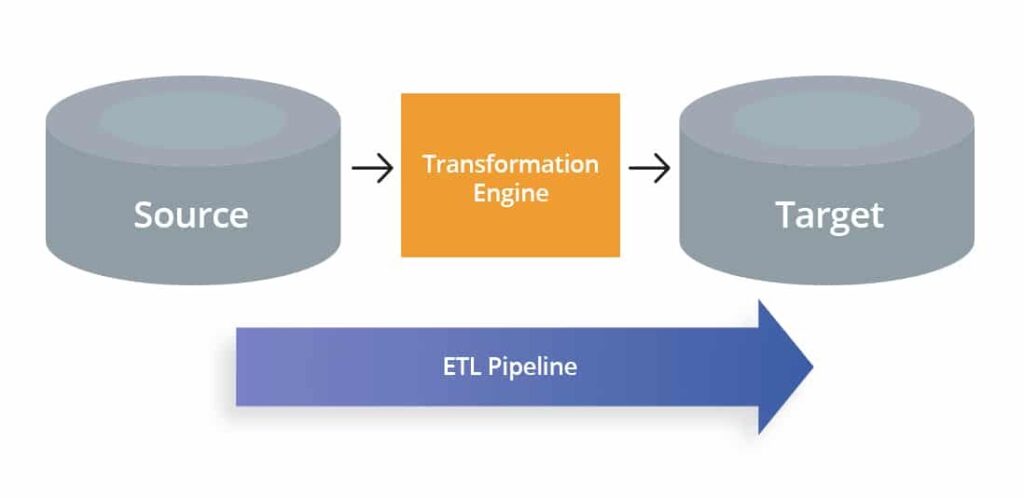

ETL pipelines are architectures that extract data from a source, transform it, and load it into a target destination for purposes such as machine learning, statistical modeling, and extracting insights. The target destination could be a data warehouse, a data mart, or a database.

ETL stands for Extraction, Transformation, and Loading. As the name suggests, the ETL process involves:

- Data integration

- Data warehousing

- Data Transformation

Extraction involves fetching data from heterogeneous sources such as business systems, applications, sensors, and databanks. The next stage is transformation, which converts the data into a defined and improved format that many applications can use. Last but not least, the accessible and improved data is loaded into a target destination. The primary objective of building an ETL pipeline is to select the right data, make it available for reporting, and store it for quick and convenient access. An ETL tool saves businesses and developers time and effort so they can focus on core business processes. There are many strategies for building ETL pipelines, depending on a business's unique requirements.
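As a rough illustration of the three stages, the following minimal sketch extracts records from two differently shaped sources, converts them into one format, and loads them into a target. The sample data, the field names, and the in-memory SQLite target are all assumptions made for the example and do not come from any particular ETL tool.

```python
import csv
import io
import json
import sqlite3

# Hypothetical sources: a CSV export and a JSON feed with different field names.
CSV_SOURCE = "id,name,amount\n1,Alice,10.50\n2,Bob,7.25\n"
JSON_SOURCE = '[{"customer_id": 3, "customer": "Carol", "total": "3.00"}]'


def extract():
    """Fetch raw records from each source as dictionaries."""
    csv_rows = list(csv.DictReader(io.StringIO(CSV_SOURCE)))
    json_rows = json.loads(JSON_SOURCE)
    return csv_rows, json_rows


def transform(csv_rows, json_rows):
    """Convert both shapes into one common (id, name, amount) format."""
    unified = [(int(r["id"]), r["name"], float(r["amount"])) for r in csv_rows]
    unified += [
        (int(r["customer_id"]), r["customer"], float(r["total"]))
        for r in json_rows
    ]
    return unified


def load(rows, connection):
    """Write the unified rows into the target table."""
    connection.execute(
        "CREATE TABLE IF NOT EXISTS sales (id INTEGER, name TEXT, amount REAL)"
    )
    connection.executemany("INSERT INTO sales VALUES (?, ?, ?)", rows)
    connection.commit()


target = sqlite3.connect(":memory:")  # stand-in for a warehouse or data mart
load(transform(*extract()), target)
row_count = target.execute("SELECT COUNT(*) FROM sales").fetchone()[0]
total_amount = target.execute("SELECT SUM(amount) FROM sales").fetchone()[0]
```

Keeping each stage in its own function makes it possible to test and replace the stages independently, which is one reason the extract, transform, and load split is so common.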

ETL Pipeline - Use Case

There are many scenarios where ETL pipelines can help deliver faster, better-informed decisions. ETL pipelines are implemented to centralize all data sources and give businesses a consolidated version of their data. Consider a Customer Relationship Management (CRM) department that uses an ETL pipeline to extract customer data from multiple touchpoints during the purchase process. The pipeline also allows the department to build comprehensive dashboards that serve as a single source of customer information drawn from different systems. Similarly, companies often need to move and transform data internally between multiple data stores. For instance, if data is scattered across different intelligence systems, it becomes difficult for a business user to derive clear insights and make rational decisions.

Data Pipeline

A data pipeline is an architecture that moves data from a source to a target destination. The steps involved can include copying data, loading it from an on-premises location into the cloud, or merging it with other data sources. The primary objective of a data pipeline is to make sure that this whole transfer process is applied consistently to all available data.

If handled properly, a data pipeline gives businesses access to consistent and well-organized data for further processing. By standardizing how data is transferred and transformed, data engineers can fetch information from various sources in a systematic way.

Data Pipeline - Use Case

Data pipelines are helpful for accurately extracting data and deriving useful insights from it. The approach works well for businesses that store and depend on many large data sources, perform real-time data analysis, and keep their data in the cloud. For instance, data pipelines can feed predictive analytics that separate the most probable future trends from the least probable ones. A production department can use predictive analytics to determine whether a raw material is likely to run out, or to forecast possible delays in a supply line. These insights help the production department run its operations with fewer interruptions and errors.

Difference between ETL Pipelines and Data Pipelines

Although ETL pipelines and data pipelines are closely related concepts and people often use the two terms interchangeably, they differ in several ways. Both are designed to move data from one system to another; the main difference is the application for which the pipeline is designed, as discussed in the points below.

- Difference in terminology between ETL pipeline and data pipeline

An ETL pipeline is a series of mechanisms that fetch data from a source, transform it, and load it into the target destination. A data pipeline is the broader term, with the ETL pipeline as a subset. A data pipeline does not necessarily include a transformation phase; it may only transfer data from a source to the target destination.

- Purpose of ETL pipeline vs. data pipeline

Put simply, a data pipeline is intended to transfer data from sources such as business processes, applications, and sensors into a data warehouse, where intelligence and analytics processes can run. An ETL pipeline, as the name suggests, is a specific kind of data pipeline in which data is extracted, transformed, and then loaded into a target destination. After the data is extracted from the source, the critical step is to fit it into a data model designed around specific business intelligence requirements. This step includes aggregating, cleaning, and transforming the data. Finally, the resulting data is loaded into the target system.

- Differences in how ETL pipelines and data pipelines run

An ETL pipeline typically fetches data in batches, moving a certain amount of data to the target system each time. These batches can be scheduled to run at a specific time each day when system traffic is low. A data pipeline, on the other hand, does not have to accumulate data from the source and can be deployed as a real-time process in which every event is handled as soon as it happens instead of in batches. Transferring data coming from an air traffic control (ATC) system is one example. Moreover, a data pipeline does not require the data to be adjusted before it is loaded into a database or a data warehouse. The data can be loaded into any destination system, such as an Amazon S3 bucket.
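The contrast between batch and event-at-a-time handling can be sketched as follows. This is only an illustrative toy: the `events` list and the in-memory lists used as destinations are assumptions, and a real deployment would rely on a scheduler for batches and a message broker for streaming.

```python
from itertools import islice


def run_batch(source, destination, batch_size):
    """Batch style: accumulate records and move them in fixed-size chunks."""
    iterator = iter(source)
    batches_moved = 0
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            break
        destination.extend(batch)  # one write per batch
        batches_moved += 1
    return batches_moved


def run_streaming(source, destination):
    """Streaming style: handle every event as soon as it arrives."""
    writes = 0
    for event in source:
        destination.append(event)  # one write per event
        writes += 1
    return writes


# Hypothetical events, e.g. position reports from an air traffic control feed.
events = [{"flight": "AB123", "seq": n} for n in range(5)]

batch_target, stream_target = [], []
batch_writes = run_batch(events, batch_target, batch_size=2)
stream_writes = run_streaming(events, stream_target)
```

Both functions deliver the same records; they differ only in how many writes they perform and how long a record waits before it reaches the destination.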

How to Build an ETL Process

When you build an ETL infrastructure, you must first gather and combine data from many sources. You then need to plan the strategy carefully and test it to ensure that data is transferred without errors. This is a lengthy and complex process.

Let's look at how it works in detail.

1. Building an ETL Pipeline for Batch Processing

As discussed earlier, an ETL pipeline moves data in batches from source databases to a target destination (a data lake or warehouse). Building an enterprise ETL architecture from scratch is a complicated task, so data engineers usually use ETL tools such as Stitch or Blendo, which simplify and automate much of the work. To develop an ETL pipeline using batch processing, you need to:

- Create a reference dataset (unique keys and permitted values)

Create a dataset that defines the set of permitted variables and values your data may contain. For instance, in air traffic control data, specify the flight numbers or flight designators that are allowed.

- Extract data from multiple sources

Successful ETL depends on extracting data correctly. Fetch data from various sources, such as application data, DBMS, RDBMS, XML, and CSV files, and convert it into a single standard format so it can be processed together.

- Validate data

Separate the records whose values fall within the expected ranges from the rest. For instance, if you only want car records from the last decade, reject any that are older than ten years. Analyze rejected records on an ongoing basis, identify the issues, correct the source data, and improve the extraction process so that the same issues do not recur in future batches.
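A minimal sketch of the reference-data and validation steps described so far might look like this. The field names, the `ALLOWED_MAKES` set, and the fixed `current_year` are hypothetical choices for the example; the point is only that rejected records are kept with a reason so they can be analyzed later.

```python
# Hypothetical reference data: the set of values a field is allowed to take.
ALLOWED_MAKES = {"Toyota", "Ford", "Honda"}
MAX_AGE_YEARS = 10


def validate(records, current_year):
    """Split records into accepted and rejected, with a reason per rejection."""
    accepted, rejected = [], []
    for record in records:
        if record["make"] not in ALLOWED_MAKES:
            rejected.append((record, "unknown make"))
        elif current_year - record["year"] > MAX_AGE_YEARS:
            rejected.append((record, "older than ten years"))
        else:
            accepted.append(record)
    return accepted, rejected


cars = [
    {"make": "Toyota", "year": 2020},
    {"make": "Ford", "year": 2005},
    {"make": "Tesla?", "year": 2021},
]
accepted, rejected = validate(cars, current_year=2024)
rejection_reasons = [reason for _, reason in rejected]
```

Passing `current_year` in explicitly, rather than reading the system clock, keeps the check repeatable when an old batch has to be reprocessed.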

- Transform data

Eliminate duplicate data, apply filters that enforce business rules, ensure data integrity (so that no data is lost), and create aggregates as necessary. To do so, you need to implement a number of functions that automate the transformation of the data.
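The transformation functions mentioned above could be sketched like this. The order records, the "amounts must be positive" business rule, and the function names are assumptions chosen for illustration only.

```python
from collections import defaultdict


def deduplicate(records, key):
    """Keep the first record seen for each key value."""
    seen = set()
    unique = []
    for record in records:
        if record[key] not in seen:
            seen.add(record[key])
            unique.append(record)
    return unique


def total_by(records, group_field, value_field):
    """Aggregate: sum value_field per distinct group_field."""
    totals = defaultdict(float)
    for record in records:
        totals[record[group_field]] += record[value_field]
    return dict(totals)


# Hypothetical order records; order 1 was delivered twice by the source.
orders = [
    {"order_id": 1, "region": "EU", "amount": 20.0},
    {"order_id": 1, "region": "EU", "amount": 20.0},
    {"order_id": 2, "region": "US", "amount": 5.0},
    {"order_id": 3, "region": "EU", "amount": -4.0},
]

unique_orders = deduplicate(orders, key="order_id")
# Example business rule (an assumption): amounts must be positive.
valid_orders = [o for o in unique_orders if o["amount"] > 0]
totals = total_by(valid_orders, "region", "amount")
```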

- Stage data

You typically do not load transformed data directly into the target destination. Instead, the data is first loaded into a staging database, which makes it easier to roll back any change if something goes wrong. This is also the point where you can produce audit reports for regulatory purposes and diagnose and repair any problems.

- Publish to your target system

When data is loaded into the target database, some data warehouses overwrite the existing information with each new batch. These overwrites may occur daily, weekly, or monthly. In other cases, the ETL process adds new data without overwriting the old, attaching a timestamp to show when each record was loaded. This approach needs to be handled carefully so that the data warehouse does not run out of disk space.
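The staging and publishing steps can be sketched with Python's built-in `sqlite3` module. The table names, columns, and date strings below are hypothetical, and an in-memory SQLite database merely stands in for a real staging area and warehouse.

```python
import sqlite3

db = sqlite3.connect(":memory:")  # stand-in for the staging area and warehouse
db.execute("CREATE TABLE staging (id INTEGER, amount REAL)")
db.execute("CREATE TABLE sales (id INTEGER, amount REAL, loaded_at TEXT)")


def stage(rows):
    """Replace the staging table contents with the new batch."""
    with db:  # commits on success, rolls back if an exception is raised
        db.execute("DELETE FROM staging")
        db.executemany("INSERT INTO staging VALUES (?, ?)", rows)


def publish(loaded_at, overwrite=False):
    """Copy staged rows into the target, either overwriting or appending."""
    with db:
        if overwrite:
            db.execute("DELETE FROM sales")
        db.execute(
            "INSERT INTO sales SELECT id, amount, ? FROM staging", (loaded_at,)
        )


stage([(1, 10.0), (2, 7.5)])
publish("2024-01-01")
stage([(3, 2.0)])
publish("2024-01-02")  # append: rows from the first batch are kept
appended = db.execute("SELECT COUNT(*) FROM sales").fetchone()[0]
publish("2024-01-03", overwrite=True)  # overwrite: only the staged batch remains
overwritten = db.execute("SELECT COUNT(*) FROM sales").fetchone()[0]
```

Because each step runs inside a transaction, a failure while publishing leaves the target table as it was, which is the main benefit of staging the data first.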

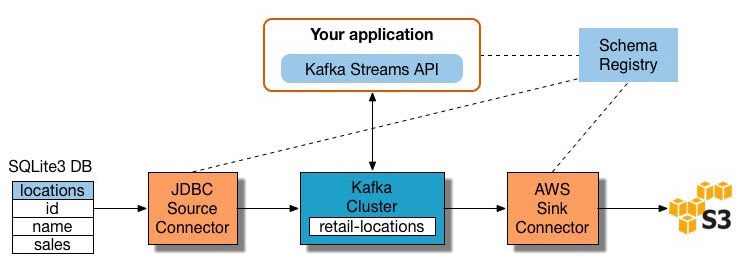

2. Building an ETL Pipeline for Stream Processing

Modern practices often involve real-time processing of data, such as web analytics data from a large e-commerce website. In such cases you cannot extract and transform data in large batches; instead, you need to perform ETL on data streams. As soon as client applications write data to the data source, you must clean and transform it while it moves between source and destination. Several stream processing tools are available, including Apache Samza, Apache Storm, and Apache Kafka. The accompanying illustration showcases an ETL pipeline based on Kafka (using the S3 Sink Connector to stream the data to Amazon S3).

(Source - Confluent)

To create a stream processing ETL pipeline using Apache Kafka, you are required to:

- Extract data into Kafka Topics

Java Database Connectivity (JDBC) is an application programming interface (API) for the Java programming language. Here, the JDBC connector pulls each row of the source table and writes it as a key/value pair into a Kafka topic. Kafka organizes message feeds into categories called topics. Each topic has a name that is unique across the entire Kafka cluster. Applications interested in the state of this table read from this topic. As client applications add rows to the source table, the connector automatically publishes them as new messages to the Kafka topic, producing a real-time data stream.

- Pull data from Kafka topics

The ETL application fetches messages from the Kafka topic as Avro records, creates an Avro schema file, and deserializes them. Deserialization is the opposite of serialization: it converts arrays of bytes back into the desired data type. The application then produces KStream objects from the messages.
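The round trip between a record and its byte representation can be illustrated with a small sketch. Note the substitution: it uses JSON from the Python standard library as a stand-in for Avro, so it shows the general idea of serialization and deserialization, not the Avro format or the Kafka client API.

```python
import json


def serialize(record):
    """Turn a dictionary into bytes, as a producer would before sending."""
    return json.dumps(record, sort_keys=True).encode("utf-8")


def deserialize(payload):
    """Turn the received bytes back into a dictionary."""
    return json.loads(payload.decode("utf-8"))


# Hypothetical message value.
original = {"order_id": 42, "status": "shipped"}
wire_bytes = serialize(original)
restored = deserialize(wire_bytes)
```

Unlike this JSON stand-in, Avro relies on a schema to interpret the bytes, which is why the application needs the schema before it can deserialize the messages.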

- Transform data in KStream objects

Using the Kafka Streams API, the stream processor receives a single record, processes it, and generates one or more output records for downstream process handlers. These process handlers can transform one message at a time, filter messages according to rules, and perform operations that span many messages.
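The record-at-a-time style of processing can be imitated with plain Python generators. This is a conceptual sketch only: it does not use the Kafka Streams API (which is a Java library), and the message fields, step names, and threshold are hypothetical.

```python
def parse(records):
    """One input record -> one output record (a map step)."""
    for key, value in records:
        yield key, {"page": value["page"], "ms": int(value["ms"])}


def drop_fast(records, threshold_ms):
    """One input record -> zero or one output record (a filter step)."""
    for key, value in records:
        if value["ms"] >= threshold_ms:
            yield key, value


def split_alerts(records):
    """One input record -> several output records (a flat-map step)."""
    for key, value in records:
        yield key, {"alert": "slow_page", "page": value["page"]}
        yield key, {"alert": "notify_owner", "page": value["page"]}


# Hypothetical key/value messages, as they might arrive from a topic.
incoming = [
    ("u1", {"page": "/home", "ms": "120"}),
    ("u2", {"page": "/cart", "ms": "950"}),
]

# Generators are lazy, so each record flows through all steps one at a time.
outgoing = list(split_alerts(drop_fast(parse(incoming), threshold_ms=500)))
```

Chaining small steps like this mirrors how a stream topology passes each record from one handler to the next without waiting for a full batch.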

- Load data to other systems

The ETL application now holds the enriched data and needs to stream it into destination systems, such as a data warehouse or data lake. Amazon S3 (Amazon Simple Storage Service) is a service provided by Amazon Web Services that offers object storage through a web service interface. In the diagram above, the S3 Sink Connector is used to stream the data to Amazon S3. You can also integrate with other systems, such as a Redshift data warehouse, by using Amazon Kinesis Data Firehose, which integrates with Amazon S3, Amazon Redshift, and Amazon Elasticsearch Service. Now you know how to perform ETL both the conventional way (batch processing) and on streaming data.

Conclusion

As you've seen, although the terms are used interchangeably, ETL pipelines and data pipelines are two different architectures. While the ETL process involves data extraction, transformation, and loading, a data pipeline doesn't necessarily include data transformation. Moving data from source to target systems lets users query it more systematically and accurately than they could by working directly with complex, diverse, raw source data. Well-structured data pipelines and ETL pipelines improve the efficiency of data management and enable data managers to iterate quickly as the data requirements of the business evolve.